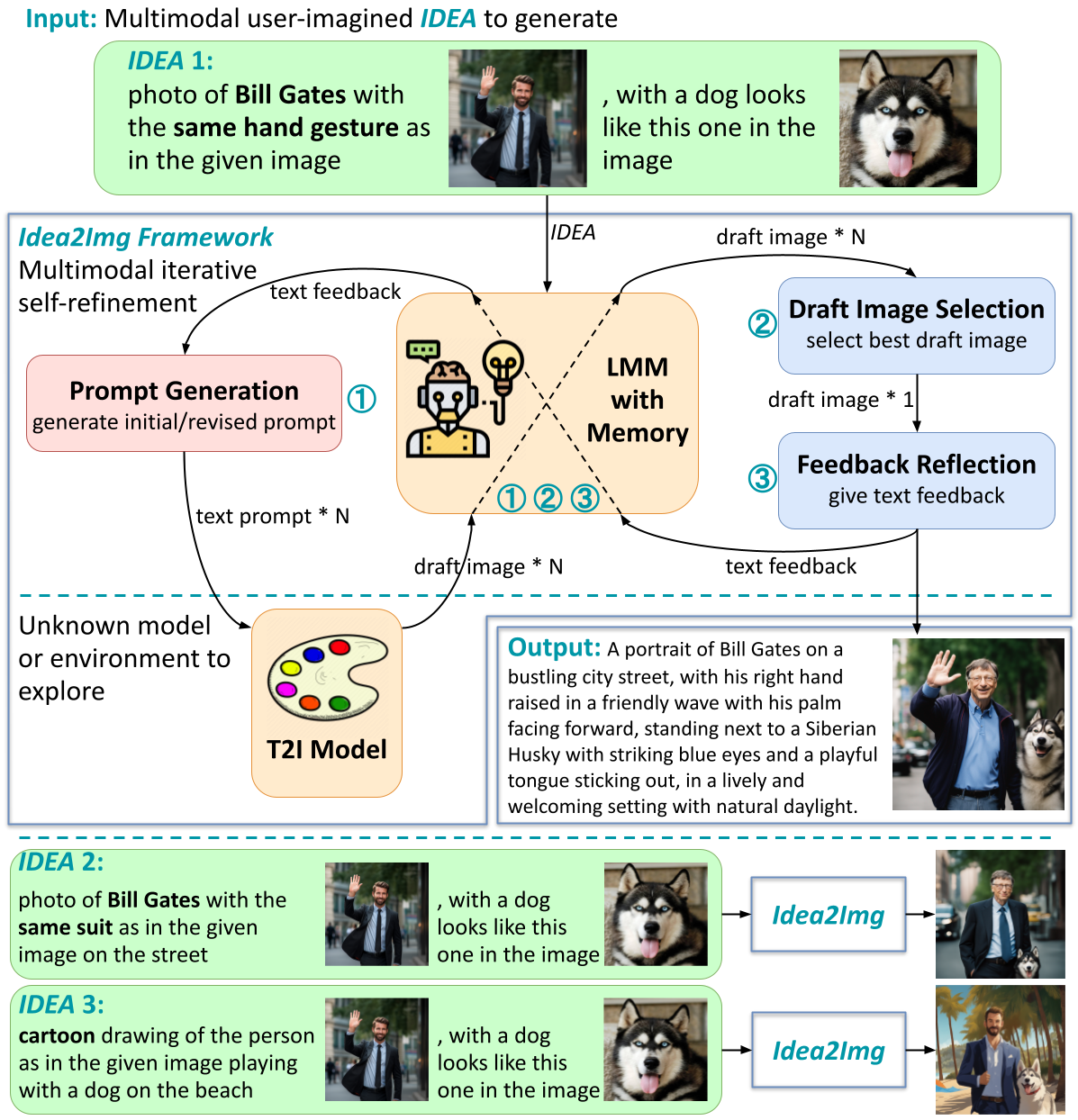

We introduce “Idea to Image”, a system that enables multimodal iterative self-refinement with GPT-4V(ision) for automatic image design and generation. Humans can quickly identify the characteristics of different text-to-image (T2I) models via iterative explorations. This enables them to efficiently convert their high-level generation ideas into effective T2I prompts that can produce good images. We investigate if systems based on large multimodal models (LMMs) can develop analogous multimodal self-refinement abilities that enable exploring unknown models or environments via self-refining tries. Idea2Img cyclically generates revised T2I prompts to synthesize draft images, and provides directional feedback for prompt revision, both conditioned on its memory of the probed T2I model’s characteristics. The iterative self-refinement brings Idea2Img various advantages over base T2I models. Notably, Idea2Img can process input ideas with interleaved image-text sequences, follow ideas with design instructions, and generate images of better semantic and visual qualities. The user preference study validates the efficacy of multimodal iterative self-refinement on automatic image design and generation.

Idea2Img involves an LMM, GPT-4V(ision), interacting with a T2I model to probe its usage for automatic image design and generation. Idea2Img uses GPT-4V to improve, assess, and verify multimodal content.

The Idea2Img framework enables LMMs to mimic humanlike exploration when using a T2I model, so that they can design and generate an imagined image specified as a multimodal input IDEA.

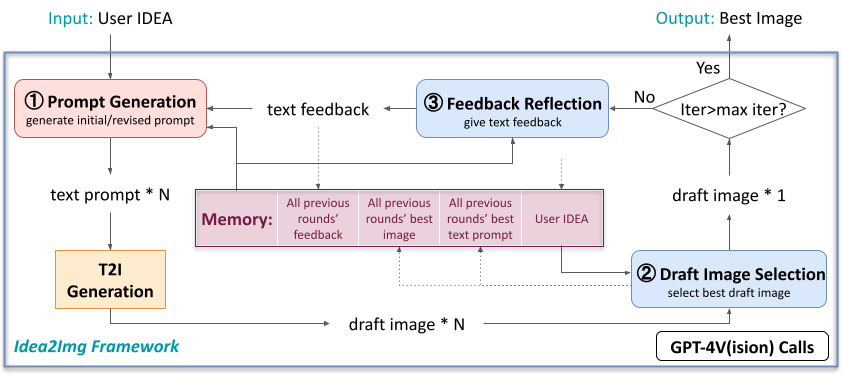

We give an overview of Idea2Img’s full execution flow below. More details can be found in our paper.

Idea2Img applies LMMs functioning in different roles to refine the T2I prompts. Specifically, they will (1) generate and revise text prompts for the T2I model, (2) select the best draft images, and (3) provide feedback on the errors and revision directions. Idea2Img is enhanced with a memory module that stores all prompt exploration histories, including previous draft images, text prompts, and feedback.

Flow chart of Idea2Img’s full execution flow.
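The three LMM roles and the memory module described above can be pictured as a simple loop. The following is a minimal sketch under stated assumptions, not the authors' implementation: the function name `idea2img_loop`, the `Round` and `Memory` classes, the callable signatures, the round limit, and the convention that feedback of `None` means "stop" are all hypothetical. The LMM and T2I calls are passed in as plain callables, so no real model API is assumed, and images are represented as strings for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Round:
    """One exploration round kept in memory (hypothetical structure)."""
    prompt: str
    image: str      # stand-in for image data, e.g. a file path
    feedback: str


@dataclass
class Memory:
    """Stores the history of prompts, draft images, and feedback."""
    rounds: List[Round] = field(default_factory=list)


def idea2img_loop(
    idea: str,
    revise_prompts: Callable[[str, Memory, int], List[str]],
    t2i: Callable[[str], str],
    select_best: Callable[[str, List[Tuple[str, str]]], Tuple[str, str]],
    give_feedback: Callable[[str, str, Memory], Optional[str]],
    max_rounds: int = 3,   # assumed cap, not taken from the source
    n_prompts: int = 2,    # assumed number of candidate prompts per round
) -> Tuple[Optional[str], Memory]:
    memory = Memory()
    best_image: Optional[str] = None
    for _ in range(max_rounds):
        # Role 1: generate or revise text prompts, conditioned on memory.
        prompts = revise_prompts(idea, memory, n_prompts)
        # Synthesize one draft image per prompt with the T2I model.
        drafts = [(p, t2i(p)) for p in prompts]
        # Role 2: select the best draft image for this round.
        prompt, best_image = select_best(idea, drafts)
        # Role 3: feedback on errors and revision directions.
        feedback = give_feedback(idea, best_image, memory)
        if feedback is None:  # assumed stop signal: nothing left to fix
            break
        memory.rounds.append(Round(prompt, best_image, feedback))
    return best_image, memory
```

In a real system each callable would wrap a call to an LMM or a T2I model; keeping them as parameters makes the control flow easy to test with toy stand-ins.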


@article{yang2023idea2img,
  title={Idea2Img: Iterative Self-Refinement with GPT-4V(ision) for Automatic Image Design and Generation},
  author={Yang, Zhengyuan and Wang, Jianfeng and Li, Linjie and Lin, Kevin and Lin, Chung-Ching and Liu, Zicheng and Wang, Lijuan},
  journal={arXiv preprint arXiv:2310.08541},
  year={2023},
}

We are deeply grateful to OpenAI for providing access to their exceptional tool. We also extend heartfelt thanks to our Microsoft colleagues for their insights, with special acknowledgment to Faisal Ahmed, Ehsan Azarnasab, and Lin Liang for their constructive feedback.

This website is adapted from Nerfies, licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.